Scott Guthrie recently gave a shout-out to the IIS SEO Toolkit on his blog. I don’t know about you, but something about SEO always brings up thoughts of greasy salesmen and used cars. Best purge those preconceptions from your mind, though, because SEO is important.

Even setting SEO aside, the SEO Toolkit is a great way to find other issues with a web site. Large content files (such as images that could probably be shrunk down), duplicate keywords, missing meta information, missing content, and more will all be revealed once you run the Toolkit against your site.

Scott gives a nice overview of the tool; I’ll let his discussion get you started and jump right into what the tool showed me.

SEO Toolkit Analysis

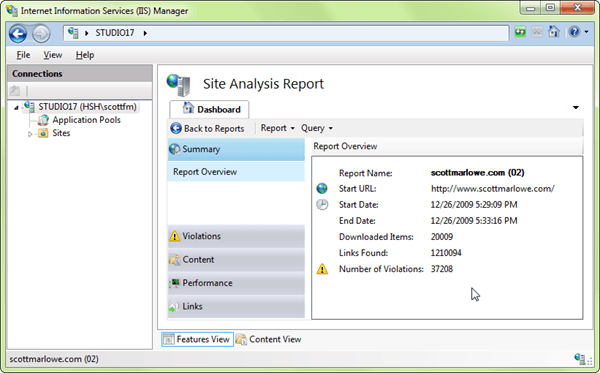

Upon running your first analysis you’ll get something like this (I ran the analysis against my fiction site, scottmarlowe.com):

The statistic I’m most concerned with is the “Number of Violations”. I’ve got over 37,000 of them!

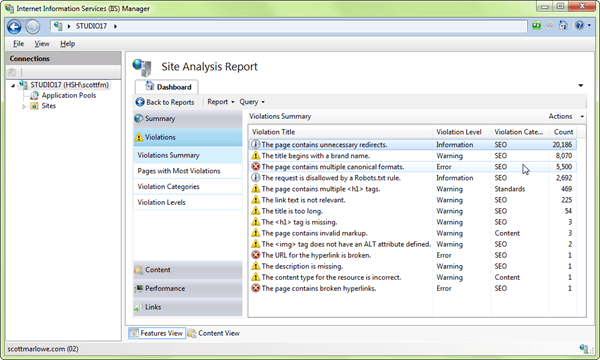

Turns out it’s not as bad as it may seem, or not as difficult to whittle that number down anyway. Here is the breakdown of violations:

Let’s take a look at the big ones (the ones with the highest counts).

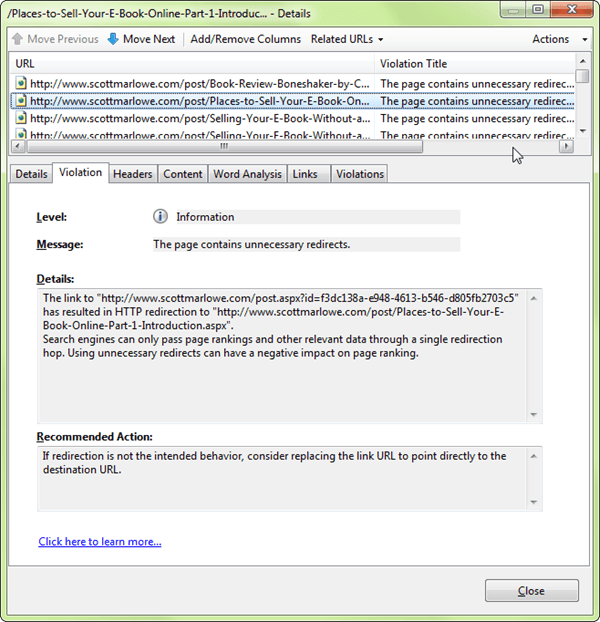

1. The page contains unnecessary redirects

Turns out most of these are of this variety:

The first link it is indicating is a permalink for a blog post that looks like this:

`<a rel="bookmark" href="http://www.scottmarlowe.com/post.aspx?id=f3dc138a-e948-4613-b546-d805fb2703c5">Permalink</a>`

This forum post suggests a quick fix for this:

For this particular URL I would consider adding a “nofollow” to the link…

I use BlogEngine.NET, which already creates its permalinks with “rel=bookmark”. Beyond the following, I couldn’t find much about what “bookmark” actually buys me:

Bookmark: Refers to a bookmark. A bookmark is a link to a key entry point within an extended document. The title attribute may be used, for example, to label the bookmark. Note that several bookmarks may be defined in each document.

But just in case, I also learned that you can specify multiple “rel” tokens simply by separating them with whitespace:

`<a rel="bookmark nofollow" href="http://www.scottmarlowe.com/post.aspx?id=f3dc138a-e948-4613-b546-d805fb2703c5">Permalink</a>`

That should do it for that violation.
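Because `rel` holds a whitespace-separated list of tokens, adding `nofollow` to an existing value just means splitting on whitespace and appending the token if it isn’t already present. Here is a minimal Python sketch of that idea; `add_rel_token` is a hypothetical helper for illustration, not something BlogEngine.NET provides:

```python
def add_rel_token(rel: str, token: str) -> str:
    """Return a rel attribute value with `token` added if not already present.

    rel values are whitespace-separated token lists, and HTML compares link
    types case-insensitively, so the membership check ignores case.
    """
    tokens = rel.split()  # split() with no argument splits on any run of whitespace
    if token.lower() not in (t.lower() for t in tokens):
        tokens.append(token)
    return " ".join(tokens)


print(add_rel_token("bookmark", "nofollow"))           # bookmark nofollow
print(add_rel_token("bookmark  nofollow", "nofollow"))  # bookmark nofollow
```

Normalizing the whitespace on output is a side effect of the split/join; browsers and crawlers treat any whitespace run as a token separator, so it doesn’t change the meaning.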

2. The title begins with a brand name

Here are the details:

Search engines often parse text so that words that appear earlier in a sentence are weighted higher than words that appear near the end of a sentence. Page relevancy is calculated by the use of important keywords that describe the page content. A page about a specific topic should use a keyword related to that topic at the beginning of the `<title>` tag instead of using a site name or brand name (“scottmarlowe”), because those do not describe the contents on the page.

This one was easy to fix. I’d been using the format `scottmarlowe.com – <page name>` as the title of each page, so based on the recommendation I simply flipped it to read `<page name> – scottmarlowe.com`.
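If your titles are built in code, the flip is a simple string operation. A minimal Python sketch, assuming titles use the “ – ” (en dash) separator shown above and that the brand is `scottmarlowe.com`; `flip_title` is hypothetical and not taken from BlogEngine.NET:

```python
SEPARATOR = " \u2013 "  # " – " (space, en dash, space), as in the titles above


def flip_title(title: str, brand: str = "scottmarlowe.com") -> str:
    """Move a leading brand name to the end of a page title.

    "scottmarlowe.com – Book Reviews" becomes "Book Reviews – scottmarlowe.com".
    Titles that don't start with the brand are returned unchanged.
    """
    prefix = brand + SEPARATOR
    if title.startswith(prefix):
        page_name = title[len(prefix):]
        return page_name + SEPARATOR + brand
    return title


print(flip_title("scottmarlowe.com \u2013 Book Reviews"))  # Book Reviews – scottmarlowe.com
```

Returning already-flipped titles unchanged makes the function safe to run more than once over the same pages.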

3. The page contains multiple canonical formats

A good discussion of this particular violation can be found on the CarlosAg Blog in the post Canonical Formats and Query Strings – IIS SEO Toolkit. The details for one of the violations of this type from my Toolkit analysis read:

The page with URL “http://www.scottmarlowe.com/category/Book-Reviews.aspx” can also be accessed by using URL “http://www.scottmarlowe.com/category/Book-Reviews.aspx?page=1”.

Search engines identify unique pages by using URLs. When a single page can be accessed by using any one of multiple URLs, a search engine assumes that there are multiple unique pages. Use a single URL to reference a page to prevent dilution of page relevance. You can prevent dilution by following a standard URL format.

I saw two ways of dealing with this violation:

a. Add a Disallow in robots.txt

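Add the following line to your robots.txt, within the `User-agent: *` group (a `Disallow` rule applies only to the user agents named above it):

`Disallow: /*?`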

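This prevents search bots from reaching, through a query-string variant, a page they would already have indexed via its main URL. Keep in mind that it blocks every URL containing a “?”, so any page reachable only through a query string will drop out of the crawl. This is the approach I took.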
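If you want to check which URLs a wildcard rule like `Disallow: /*?` catches, note that Python’s built-in `urllib.robotparser` does plain prefix matching and does not treat `*` as a wildcard. The sketch below instead follows the wildcard semantics Google documents for robots.txt: `*` matches any run of characters, a trailing `$` anchors the end of the URL, and matching starts at the beginning of the path. It handles a single pattern only and ignores `Allow` precedence; the function names are hypothetical:

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any sequence of characters; a trailing '$' anchors the
    pattern to the end of the URL. Everything else is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then rejoin the pieces with '.*' wherever '*' was.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query, disallow_pattern):
    """True if the URL path (including any query string) matches the pattern.

    re.match anchors at the start, giving robots.txt's prefix-match behavior.
    """
    return robots_pattern_to_regex(disallow_pattern).match(path_and_query) is not None


print(is_disallowed("/category/Book-Reviews.aspx?page=1", "/*?"))  # True
print(is_disallowed("/category/Book-Reviews.aspx", "/*?"))         # False
```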

b. Add a `<link>` with rel=“canonical”

Add the following to the `<head>` of each page, with `href` set to that page’s preferred URL:

`<link rel="canonical" href="http://www.my-site.com/my-canonical-url" />`

Note that this is new and may not be supported by all search engines.
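For the `?page=1` duplicates above, the canonical URL is just the page URL with its query string removed. A minimal Python sketch that builds the tag under that assumption; `canonical_link_tag` is a hypothetical helper, and stripping the query would be wrong for pages whose content depends on it, such as the `post.aspx?id=` permalinks earlier in this post:

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url):
    """Build a rel="canonical" <link> tag pointing at `url` minus its query string.

    Assumes the query string never changes the page content (true for ?page=1
    on the first page of a category listing, but not in general).
    """
    parts = urlsplit(url)
    # Keep scheme, host and path; drop the query string and any fragment.
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return '<link rel="canonical" href="%s" />' % escape(canonical, quote=True)


print(canonical_link_tag("http://www.scottmarlowe.com/category/Book-Reviews.aspx?page=1"))
# <link rel="canonical" href="http://www.scottmarlowe.com/category/Book-Reviews.aspx" />
```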

4. The request is disallowed by a Robots.txt rule

This one was purely informational and, in fact, a non-issue: the pages my robots.txt excluded from crawling weren’t supposed to be crawled.

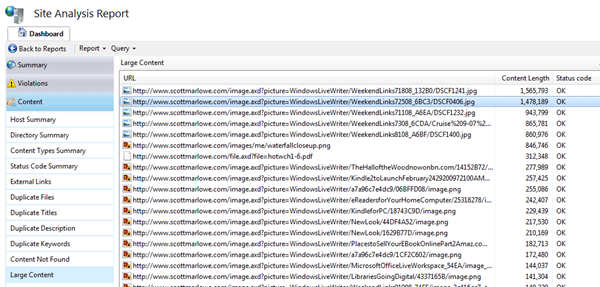

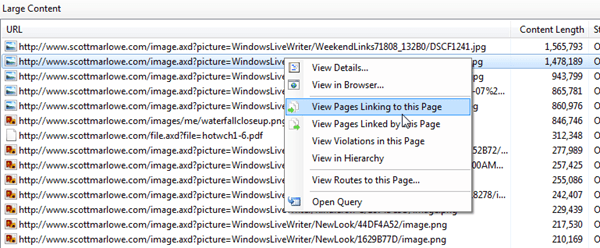

5. Large Content Files

The SEO Toolkit does a nice job of displaying your largest files:

In my case, I had a few image files that were part of different blog posts that were shrunk when displayed but were, in fact, quite large. A lot of bandwidth was being wasted downloading the oversized images before they were scaled down and rendered on the client machine. Identifying the pages where those images were being used was pretty easy. Just right-click on the image in question and select “View Pages Linking to this Page”:

I realized I could live without the images on those particular posts so I simply deleted them from the pages where they were being used.
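Without the Toolkit, the “which pages use this image?” question can be approximated by scanning your pages’ HTML for `<img>` tags whose `src` resolves to the image’s URL. A minimal standard-library Python sketch; the `pages` dictionary stands in for however you fetch your pages, and `pages_using_image` and the example URLs are hypothetical:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageSrcCollector(HTMLParser):
    """Collect the src attribute of every <img> tag in a document."""

    def __init__(self):
        super().__init__()
        self.sources = []  # src values in document order

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also arrive here via handle_startendtag.
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def pages_using_image(pages, image_url):
    """Return URLs of pages (a dict of page URL -> HTML) whose <img> tags
    resolve to image_url. Relative src values are resolved against the page URL."""
    hits = []
    for page_url, html in pages.items():
        collector = ImageSrcCollector()
        collector.feed(html)
        collector.close()
        if any(urljoin(page_url, src) == image_url for src in collector.sources):
            hits.append(page_url)
    return hits


pages = {
    "http://www.scottmarlowe.com/post/a.aspx": '<p><img src="/images/big.jpg" width="200"></p>',
    "http://www.scottmarlowe.com/post/b.aspx": "<p>No images here.</p>",
}
print(pages_using_image(pages, "http://www.scottmarlowe.com/images/big.jpg"))
# ['http://www.scottmarlowe.com/post/a.aspx']
```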

6. Duplicate Description

This turned out to be a bug in my code that caused many pages to have the meta description set twice. I’ve corrected the code and hope not to see the violation the next time I run the analysis.
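To catch that kind of regression before the next Toolkit run, you can count the `<meta name="description">` tags in a page’s HTML. A minimal sketch using Python’s standard `html.parser`; `count_meta_descriptions` is a hypothetical helper, not part of the Toolkit:

```python
from html.parser import HTMLParser


class MetaDescriptionCounter(HTMLParser):
    """Count <meta name="description"> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names but not attribute
        # values, so normalize the value of name= before comparing.
        # Self-closing <meta ... /> tags are routed here by the default
        # handle_startendtag, so they are counted too.
        if tag == "meta":
            name = dict(attrs).get("name") or ""
            if name.strip().lower() == "description":
                self.count += 1


def count_meta_descriptions(html):
    counter = MetaDescriptionCounter()
    counter.feed(html)
    counter.close()
    return counter.count


page = '<head><meta name="description" content="a"><meta name="description" content="b"></head>'
print(count_meta_descriptions(page))  # 2
```

Any page where the count is greater than one has the same duplicate-description problem.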

Some oddities

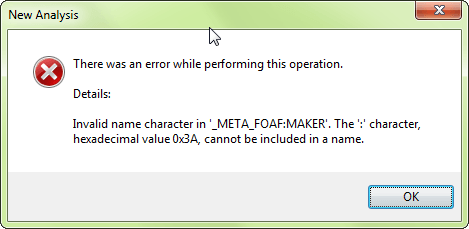

The SEO Toolkit does have some known problems. After running my fifth or so analysis I all of a sudden starting seeing the error “Invalid name character… The ‘:’ character, hexadecimal value 0x3A, cannot be included in a name”:

It turns out this is a known bug in how the Toolkit handles meta information, and a fix should be released sometime soon. I’ll update this post once it is.

Unfortunately, this error prevented me from running any further analyses of my site, so I’ll have to wait until the fix is released to verify whether I’ve resolved the major violations.